Gray-Level Re-Rendering and the Psychophysical Experiments

We conducted two psychophysical experiments to evaluate our semantic gray-level re-rendering.

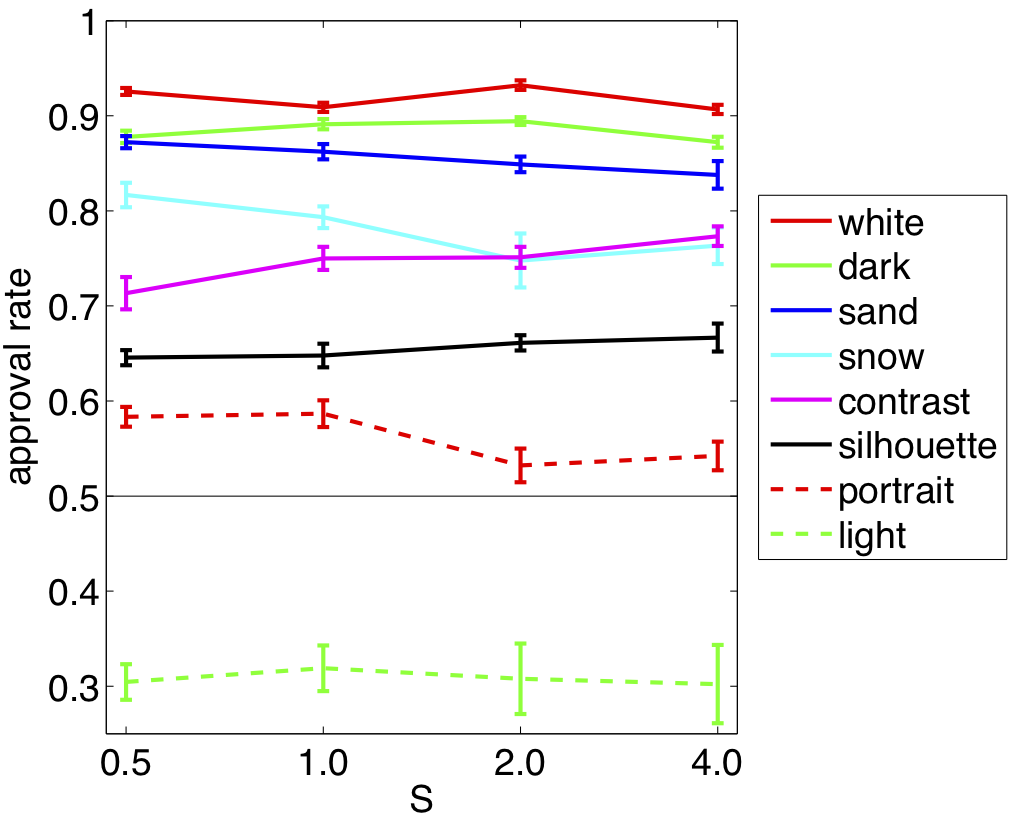

1. The first psychophysical experiment was a large-scale study on Amazon Mechanical Turk in which we compared original images against their semantically enhanced versions. Observers saw the two versions of an image side by side, with the keyword shown as the title, and were asked to select the image that best matches the keyword. We tested:

- 8 semantic classes (i.e. keywords)

- 30 images for each class

- 4 versions of each image, each rendered with a different value of the parameter S

- 30 observers for each version

This amounts to 8 × 30 × 4 × 30 = 28,800 pairwise comparisons.
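The total is the product of the four factors of a full factorial design. A minimal sketch that enumerates every (class, image, S-version, observer) cell confirms the count; the constant and function names are illustrative and not taken from the study's code.

```python
from itertools import product

# Factors of the first experiment, as listed above (names are illustrative).
N_CLASSES = 8            # semantic classes (keywords)
N_IMAGES_PER_CLASS = 30  # images per class
N_S_VALUES = 4           # versions per image, one per value of S
N_OBSERVERS = 30         # observers per version


def count_comparisons() -> int:
    """Enumerate every (class, image, S-version, observer) cell.

    Each cell corresponds to one pairwise comparison (original vs. enhanced).
    """
    cells = product(range(N_CLASSES), range(N_IMAGES_PER_CLASS),
                    range(N_S_VALUES), range(N_OBSERVERS))
    return sum(1 for _ in cells)


total = count_comparisons()
assert total == N_CLASSES * N_IMAGES_PER_CLASS * N_S_VALUES * N_OBSERVERS
print(total)  # 28800
```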

This overview shows all images and results that we obtained for S=1.

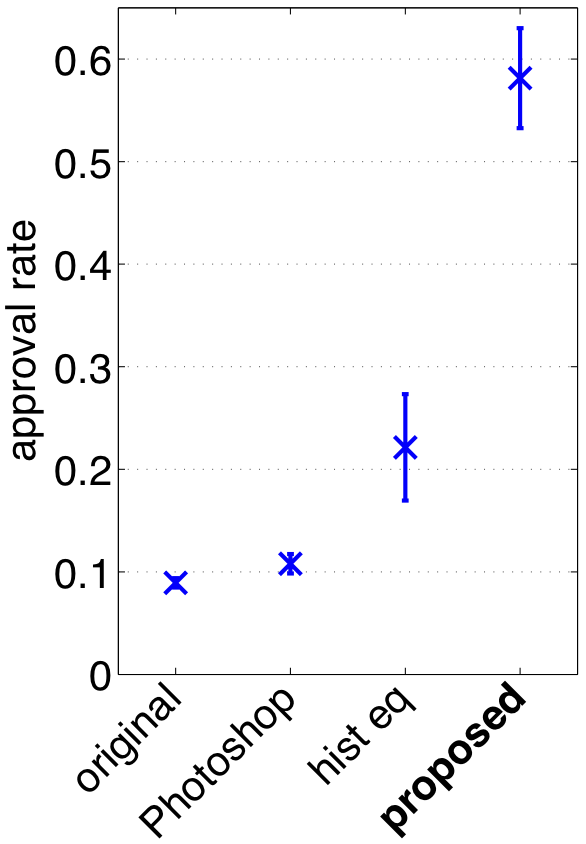

2. In the second psychophysical experiment, the observers saw

- the original image

- a version after our semantic gray-level re-rendering

- a version after Photoshop's auto contrast

- a version after a histogram equalization

and had to select the image that best matches the given keyword. For this study, we took all images from the first experiment that were annotated with at least two of the eight keywords. This experiment shows that our semantic adaptation has a clear advantage over the other tested methods.
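Histogram equalization, one of the two baselines, is a standard technique, but the text does not say which implementation or variant was used. The sketch below is a textbook global equalization of an 8-bit gray-level image with NumPy, given only as an assumption for illustration; the function name `histogram_equalize` is hypothetical. Photoshop's auto contrast is proprietary and is not reproduced here.

```python
import numpy as np


def histogram_equalize(gray: np.ndarray, levels: int = 256) -> np.ndarray:
    """Global histogram equalization of an 8-bit gray-level image.

    Each gray level is mapped through the normalized cumulative histogram
    (CDF), spreading the occurring levels over the full output range.
    """
    if gray.dtype != np.uint8:
        raise ValueError("expected an 8-bit (uint8) gray-level image")
    hist = np.bincount(gray.ravel(), minlength=levels)
    cdf = np.cumsum(hist)
    cdf_min = cdf[cdf > 0][0]  # CDF value at the darkest occurring level
    n = gray.size
    if n == cdf_min:           # constant image: no range to stretch
        return gray.copy()
    # Standard mapping: round((cdf - cdf_min) / (n - cdf_min) * (levels - 1)).
    lut = np.round((cdf - cdf_min) / (n - cdf_min) * (levels - 1))
    lut = np.clip(lut, 0, levels - 1).astype(np.uint8)
    return lut[gray]           # apply the lookup table pixel-wise
```

The darkest occurring level maps to 0 and the brightest to 255; a constant image is returned unchanged.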

This overview shows all images and results.

Results from the two psychophysical experiments. Left: approval rates from 30 observers for different values of S. Images of all semantic concepts except one are enhanced successfully, with approval rates of up to 93%. The approval rate for the keyword light rose to 62% in an additional experiment in which only artists took part. Right: approval rates from 40 observers comparing the proposed method against other contrast enhancement methods (histogram equalization and Photoshop's auto contrast function). The proposed method's approval rate is more than 2.5 times that of the second-best method. The error bars in both panels show the variance across images.
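The approval rates and error bars described above can be computed from raw observer choices roughly as follows. This is a sketch under stated assumptions: per image, a method's approval rate is the fraction of observers who chose it; the reported value is the mean over images; and the error bar is the population variance across images (the text says "variance" without specifying population or sample). Method labels, function names, and the toy choices are hypothetical, not data from the study.

```python
from statistics import mean, pvariance

# Hypothetical labels for the four alternatives shown in the second experiment.
METHODS = ("original", "semantic", "auto_contrast", "hist_eq")


def approval_rate(choices: list[str], method: str) -> float:
    """Fraction of observers who picked `method` for a single image."""
    return sum(c == method for c in choices) / len(choices)


def summarize(choices_per_image: dict[str, list[str]],
              methods: tuple[str, ...] = METHODS) -> dict[str, tuple[float, float]]:
    """For each method: (mean approval rate over images, variance across images)."""
    summary = {}
    for m in methods:
        rates = [approval_rate(ch, m) for ch in choices_per_image.values()]
        # Population variance is an assumption; statistics.variance gives
        # the sample variance instead.
        summary[m] = (mean(rates), pvariance(rates))
    return summary


def ratio_to_runner_up(summary: dict[str, tuple[float, float]],
                       method: str = "semantic") -> float:
    """How many times higher `method`'s mean approval is than the best other method."""
    runner_up = max(v[0] for m, v in summary.items() if m != method)
    return summary[method][0] / runner_up if runner_up > 0 else float("inf")


# Toy choices for two images and four observers (made up, not study data).
toy = {
    "img_a": ["semantic", "semantic", "hist_eq", "original"],
    "img_b": ["semantic", "auto_contrast", "semantic", "semantic"],
}
summary = summarize(toy)
print(summary["semantic"], ratio_to_runner_up(summary))
```

For the first experiment, the same functions apply with two alternatives, e.g. `methods=("original", "enhanced")`, summarized separately for each keyword and each value of S.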